In this blog, I would like to try answering the above question. Finding good hyperparameters is one of the most critical parts of deep learning. Here we discuss the effect of batch size on the performance of Convolutional Neural Networks (CNNs). Before answering the question, let me explain the term: the batch size is the number of training samples passed through the network to make a single update to the network parameters.
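To make the definition concrete, here is a minimal sketch of how the batch size determines the number of parameter updates in one pass over the training set. The helper name `updates_per_epoch`, its `drop_last` flag, and the training-set size of 50,000 are my own assumptions for illustration and are not taken from the paper.

```python
import math


def updates_per_epoch(num_samples: int, batch_size: int, drop_last: bool = False) -> int:
    """Number of parameter updates in one pass over the training set.

    Hypothetical helper for illustration; `drop_last` discards the final
    partial batch when the batch size does not divide the dataset evenly.
    """
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    if drop_last:
        return num_samples // batch_size
    return math.ceil(num_samples / batch_size)


# Illustrative training-set size (an assumption, not a figure from the paper).
num_samples = 50_000
for batch_size in (16, 64, 256, 1024):
    print(batch_size, updates_per_epoch(num_samples, batch_size))
```

A smaller batch size means more updates per pass over the data, with each update computed from fewer samples.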

I would like to discuss a research paper that gives deep insight into the relationship between training set batch size and CNN performance.

Impact of Training Set Batch Size on the Performance of Convolutional Neural Networks for Diverse Datasets

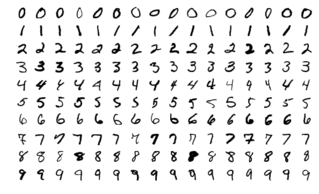

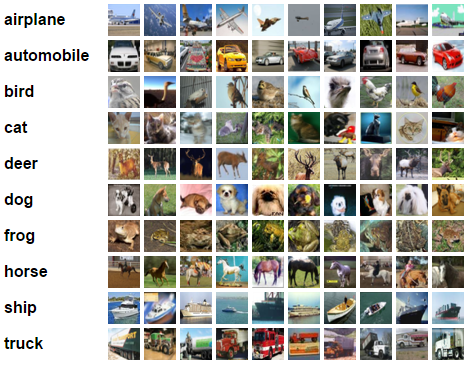

The benchmark image classification problem is conducted on the MNIST and CIFAR-10 datasets to estimate network training performance. The MNIST dataset is a database of handwritten digits from zero to nine. The CIFAR-10 dataset consists of 60,000 32×32 color images in 10 different classes.

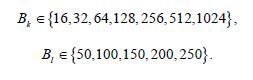

Based on related work, two sequences of batch size values were selected: powers of two and multiples of ten.
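As a rough sketch, the two kinds of sequences could be generated as follows. The function names are hypothetical. The 16 to 1024 range comes from the curves discussed later in this post, while the bounds for the multiples of ten are placeholders I picked, since the exact values used in the paper are not reproduced in the text here.

```python
def powers_of_two(low: int, high: int) -> list:
    """All powers of two in the closed range [low, high]."""
    values = []
    value = 1
    while value <= high:
        if value >= low:
            values.append(value)
        value *= 2
    return values


def multiples_of_ten(low: int, high: int) -> list:
    """All multiples of ten in the closed range [low, high]."""
    first = ((low + 9) // 10) * 10  # round low up to the next multiple of ten
    return list(range(first, high + 1, 10))


# Range taken from the batch sizes mentioned in this post.
print(powers_of_two(16, 1024))
# Placeholder bounds (an assumption, not the paper's values).
print(multiples_of_ten(10, 100))
```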

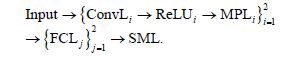

In addition, a different CNN architecture was applied to each dataset. The first task was to examine the influence of batch size on the MNIST dataset, for which a well-known CNN architecture, LeNet, was used.

For the tests on the CIFAR-10 dataset, a neural network with five convolutional layers was used, with normalization layers added. The selected models were implemented using the machine learning framework TensorFlow v1.3.0.

The models were trained using SGD with learning rates of 0.001 and 0.0001 for the MNIST and CIFAR-10 datasets, respectively. Performance was evaluated as an average over 5,000 and 10,000 iterations for the MNIST and CIFAR-10 datasets, respectively.
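To show where the batch size enters SGD, here is a minimal pure-Python sketch of mini-batch SGD on a toy linear regression problem. This is not the paper's TensorFlow setup: the model, the synthetic data, the function name `minibatch_sgd`, and the learning rate of 0.1 are all assumptions chosen so the toy example converges quickly.

```python
import random


def minibatch_sgd(xs, ys, batch_size, learning_rate, iterations, seed=0):
    """Fit y = w * x + b by mini-batch SGD on the mean squared error."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(iterations):
        # Draw one mini-batch of indices without replacement.
        batch = rng.sample(range(n), batch_size)
        grad_w = 0.0
        grad_b = 0.0
        for i in batch:
            error = (w * xs[i] + b) - ys[i]
            grad_w += 2.0 * error * xs[i]
            grad_b += 2.0 * error
        # One parameter update per mini-batch, using the averaged gradient.
        w -= learning_rate * grad_w / batch_size
        b -= learning_rate * grad_b / batch_size
    return w, b


# Synthetic, noiseless data from y = 2x + 1 (illustrative only).
data_rng = random.Random(42)
xs = [data_rng.uniform(-1.0, 1.0) for _ in range(512)]
ys = [2.0 * x + 1.0 for x in xs]

# Toy learning rate; the paper uses 0.001 and 0.0001 for its CNNs.
w, b = minibatch_sgd(xs, ys, batch_size=16, learning_rate=0.1, iterations=2000)
print(w, b)
```

Each iteration makes exactly one update from `batch_size` samples, so changing `batch_size` changes how many samples contribute to every gradient estimate.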

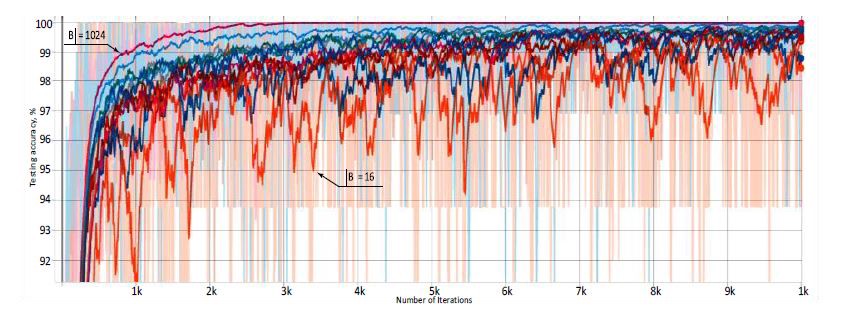

From Fig. 1 we can conclude that the larger the batch size, the smoother the curve. The lowest and noisiest curve corresponds to a batch size of 16, whereas the smoothest curve corresponds to a batch size of 1024.

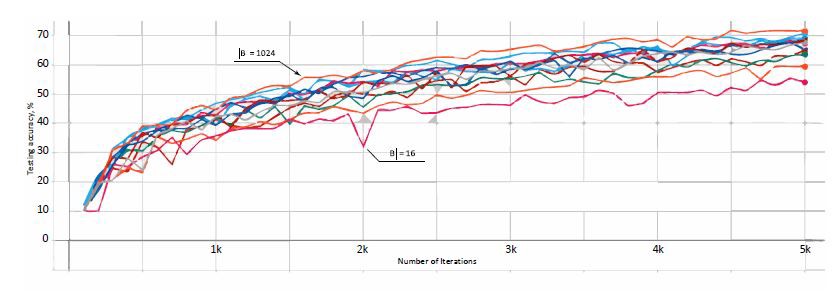

From Fig. 2 we can observe that the smoothness of the curve is approximately the same for all batch size values. The lowest curve corresponds to a batch size of 16, and the highest corresponds to a batch size of 1024.

Comparing the two figures, we can conclude that the curves describing testing accuracy are noisy on the MNIST dataset and smooth on the CIFAR-10 dataset. In both cases the batch size ranges from 16 to 1024.
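The smoothness comparisons above are made by eye. If you want to put a number on them for your own runs, one simple option is the mean absolute change between consecutive points of an accuracy curve. The `roughness` helper below is a hypothetical metric of my own, not one used in the paper, and the accuracy values are made up for illustration.

```python
def roughness(curve):
    """Mean absolute change between consecutive points of a curve."""
    if len(curve) < 2:
        return 0.0
    steps = [abs(after - before) for before, after in zip(curve, curve[1:])]
    return sum(steps) / len(steps)


# Made-up accuracy values for illustration only (not data from the paper).
noisy_curve = [0.90, 0.94, 0.91, 0.95, 0.92]
smooth_curve = [0.90, 0.92, 0.94, 0.95, 0.96]

print(roughness(noisy_curve))
print(roughness(smooth_curve))
```

A higher value means the curve jumps around more from one evaluation point to the next.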

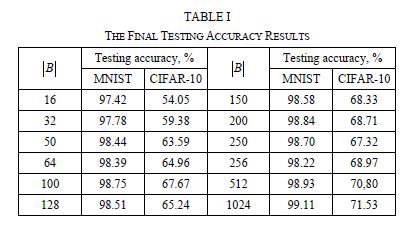

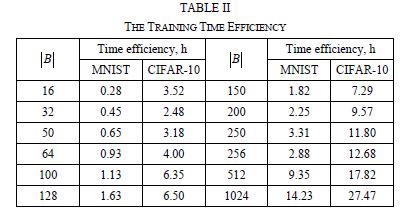

In addition, Table 1 shows that the testing accuracy for both datasets increased as the batch size increased. Training time follows a similar trend: from Table 2, we can infer that the higher the batch size, the more time is required to train the network.

[1]: Pavlo M. Radiuk, Impact of Training Set Batch Size on the Performance of Convolutional Neural Networks for Diverse Datasets, Khmelnitsky National University, Ukraine.

[2]: MNIST Dataset

[3]: CIFAR-10 Dataset

[4]: TensorFlow